Military Grapples with Generative AI Integration

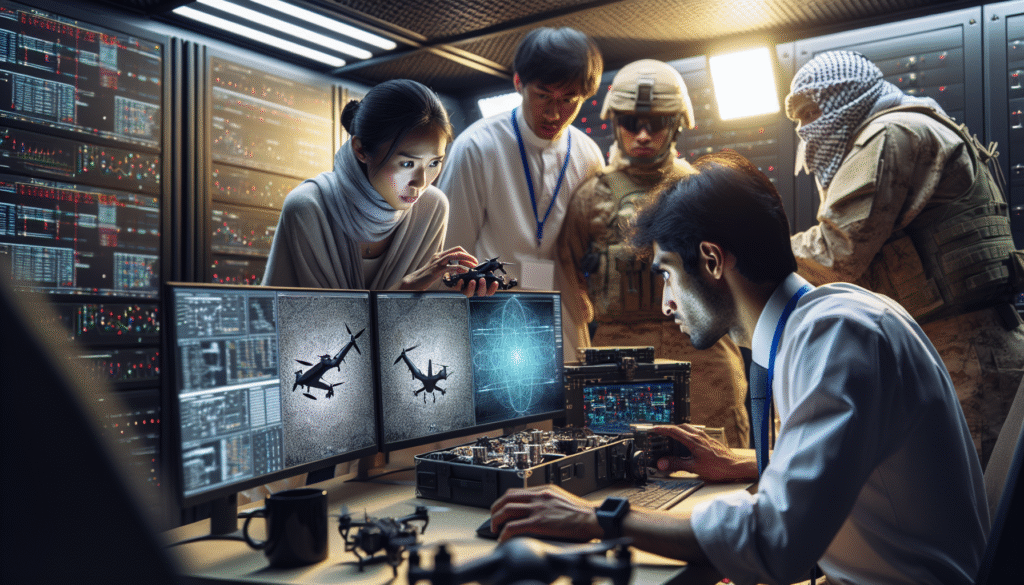

As the U.S. military continues to explore the integration of generative artificial intelligence into daily operations, users across the Department of Defense are expressing a complex mix of enthusiasm, skepticism, and concern, according to a recent report by DefenseScoop titled “GenAI mil users have mixed reactions — and many questions.”

The article sheds light on the Pentagon’s cautious but growing experimentation with generative AI (GenAI) technologies, including tools powered by large language models, within various operational contexts. While defense officials have touted GenAI’s potential to streamline workflows, enhance decision-making, and modernize warfighting capabilities, actual users on the ground—both civilian and uniformed personnel—are encountering a blend of utility and uncertainty.

DefenseScoop’s report highlights feedback collected during early field trials and limited deployments of GenAI tools, such as AI-enabled chat platforms and automated document summarization systems. Some service members report that these tools have made certain administrative processes more efficient, saving time on routine tasks and enabling quicker access to relevant data. Others, however, point to reliability issues and unresolved security concerns that undermine trust in the technology.

One major sticking point for users is the opacity of GenAI decision-making processes. Unlike traditional software systems, which operate within clearly defined rules, GenAI models produce outputs based on probabilities derived from extensive training data. This black-box nature raises concerns about explainability, especially in high-stakes military environments where accountability and predictability are paramount. Users said they want more transparency and control over how these systems generate and filter information.

In addition to concerns about accuracy and dependability, some users are apprehensive about the broader implications of deploying GenAI tools without rigorous policy frameworks. They worry that overreliance on machine-generated content could introduce new vulnerabilities, including the unintentional spread of misinformation or biased outcomes. DefenseScoop reports that several early users advised putting firmer guardrails in place before any large-scale adoption of these tools.

Despite the ambivalence, DefenseScoop notes that enthusiasm remains among certain innovators within the military’s technology community. Proponents argue that, with proper safeguards, generative AI could dramatically improve productivity and give warfighters a strategic edge. Nonetheless, the article emphasizes that convincing frontline users and mid-level commanders of both the value and the safety of AI remains a pressing challenge for the Department of Defense.

As the Pentagon’s Chief Digital and Artificial Intelligence Office (CDAO) continues to spearhead AI integration efforts, the mixed reactions outlined in DefenseScoop’s article suggest that successful adoption will depend not only on technological breakthroughs but also on robust training, cultural change, and the development of ethical and operational guidelines tailored to the realities of military service.

In the meantime, the DoD appears to be walking a fine line between innovation and caution. Limited pilot programs and sandbox environments have become preferred testing grounds, allowing developers to gather critical user feedback and address issues before any broad-scale implementation. This measured approach reflects the department’s recognition that, in matters of national security, the tools of tomorrow must earn trust today.