AI Chatbot Flaws Pose Growing National Security Risk

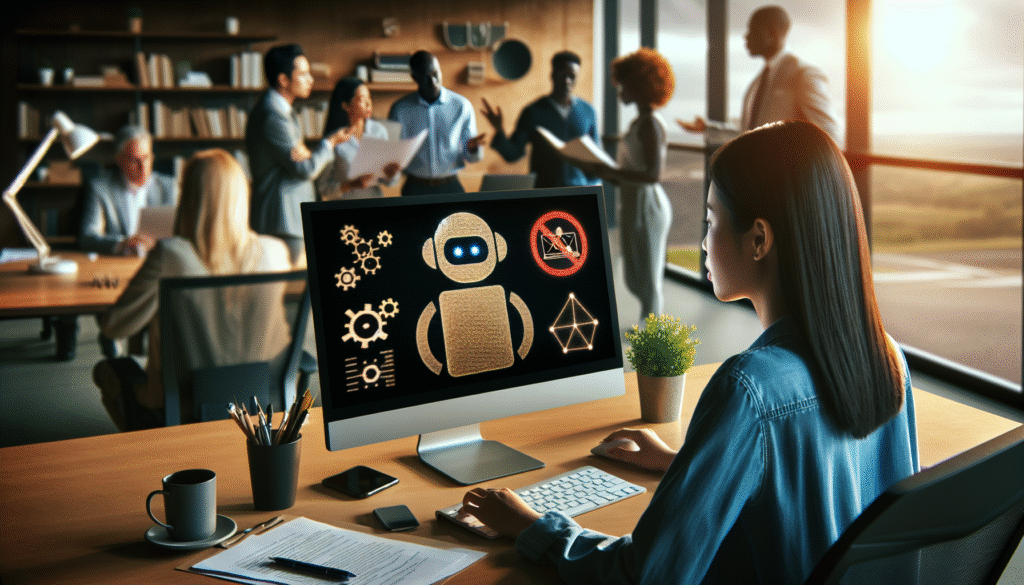

Military and cybersecurity experts are raising alarm bells over a little-known vulnerability in most artificial intelligence chatbots that could have far-reaching national security implications. According to a recent article titled “Military experts warn security hole in most AI chatbots can sow chaos,” published by Defense News, the flaw lies in the ease with which these language-based AI systems can be manipulated through a technique known as prompt injection.

Prompt injection exploits the very mechanism that powers large language models—namely, their ability to process and respond to human language input. By crafting malicious prompts that appear legitimate on the surface, adversaries can potentially trigger unintended behaviors, such as generating misinformation, leaking protected system operations, or even impairing interfacing software. Experts quoted in the Defense News piece warn that these vulnerabilities are not just hypothetical concerns but present an urgent, active threat that bad actors, including nation-states, could weaponize.

The growing integration of AI chatbots within military and defense ecosystems underscores the gravity of the issue. These tools are increasingly relied upon for logistical planning, document generation, and assisting personnel with administrative tasks. If compromised, even mundane-seeming chatbot applications could turn into vectors for disinformation campaigns or more sophisticated forms of cyberwarfare.

Dr. Kiri Reay, a cybersecurity specialist cited in the Defense News report, cautions that existing security frameworks are ill-equipped to handle the inherently open-ended nature of chatbot communications. “You can’t firewall a conversation,” she notes, pointing to the limited effectiveness of traditional software defenses against linguistic exploitation. Current mitigation approaches—such as output filtering and content moderation—are reactive at best and frequently fail to capture the nuances involved in prompt-based manipulation.

At stake is not only operational security but also public trust. The ubiquity of chatbots in both civilian and military life means that any widespread attempt to distort their output could lead to ripple effects across institutions. As geopolitical tensions escalate around AI governance and digital sovereignty, adversarial prompt injection could become a favored tool of disruption, especially given its low cost and relative ease of execution.

Furthermore, the Defense News article highlights a troubling lag between the pace of AI deployment in defense systems and the development of safeguards capable of defending against these new vectors of attack. Pentagon officials acknowledged the concern but offered few details about any formalized protocols to counteract such vulnerabilities. Meanwhile, private-sector developers and researchers continue to iterate rapidly on new models, often prioritizing performance gains over resilience and security features.

A growing chorus of experts is calling on defense agencies and AI companies to collaborate more closely, arguing for standardized testing environments that can simulate and stress-test against prompt injection and similar threats. Without such coordinated efforts, the road ahead could be marked by a steady erosion of trust in AI-based decision-making platforms, both in combat scenarios and broader strategic planning.

As the military and intelligence communities double down on AI-driven innovation, the Defense News article serves as a stark reminder that security must remain a primary concern—not an afterthought. The inherent capabilities of chatbots to interpret and generate humanlike responses are also what make them susceptible to manipulation, and until these structural challenges are effectively addressed, the risk of chaos—whether accidental or engineered—remains palpable.